📊 The Ethics of Autonomous Finance: Who’s Accountable When AI Makes a Mistake?

Autonomous finance, the use of AI to make financial decisions with little or no human input, is rapidly becoming the norm. AI systems now manage portfolios, approve loans, and flag fraud, often faster and at a larger scale than human teams can.

But with great automation comes a critical ethical question:

Who’s accountable when AI makes a financial mistake?

As we hand over more responsibility to machines, the need for clear ethical, legal, and operational frameworks becomes urgent. In this article, we’ll examine the rising concerns around accountability, fairness, transparency, and trust in the age of autonomous finance.

🤖 When Finance Goes Autonomous

Autonomous financial systems can:

- Approve or deny loan applications

- Execute trades worth millions

- Detect and block payments suspected of fraud

- Advise on tax strategies

- Adjust insurance pricing in real time

These decisions can impact lives, livelihoods, and markets. So when something goes wrong, who is responsible?

⚠️ Real-World Ethical Dilemmas

1. Bias in Lending Decisions

If an AI denies a mortgage to a qualified applicant based on biased training data, is the developer, the bank, or the AI at fault?

2. Trading Algorithm Malfunctions

If an autonomous trading agent crashes a portfolio due to a data error, who is liable—especially if it acted within its programmed logic?

3. False Fraud Flags

If an AI mistakenly blocks a legitimate transaction (like paying rent or tuition), what recourse does the customer have? Who apologizes? Who pays?

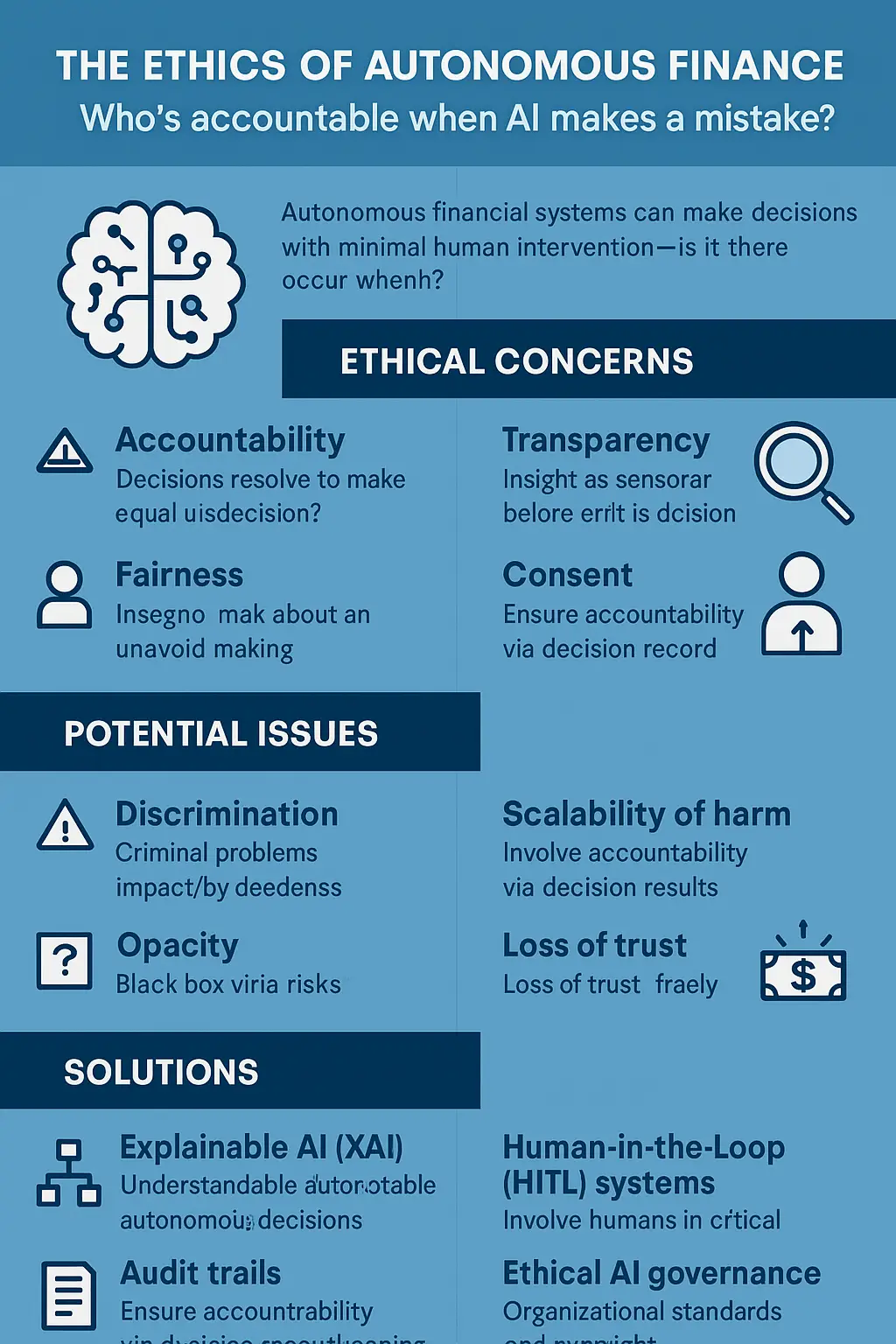

🧭 Key Ethical Questions

| Ethical Principle | The Dilemma |
|---|---|
| Accountability | Who is responsible when AI makes a bad decision? |
| Transparency | Can customers understand how the AI made its choice? |
| Fairness | Does the system treat all users equitably, regardless of background or data gaps? |
| Consent | Are users aware their financial lives are being guided by algorithms? |
| Redress | Is there a way to appeal or override the AI’s decision? |

🛠️ What Can Go Wrong Without Oversight

- Discrimination: AI may learn historical bias from its training data (e.g., racial disparities in credit scoring).

- Opacity: “Black box” models make decisions that even developers can’t fully explain.

- Scalability of harm: A flawed rule in one system can affect millions of people simultaneously.

- Loss of trust: One bad AI decision can erode a company’s reputation overnight.
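The discrimination risk above is one of the few that can be checked with simple arithmetic. The sketch below compares approval rates across groups and flags a large gap for review. It is a minimal illustration, not a compliance test: the group labels, the sample data, and the 0.8 threshold (borrowed from the "four-fifths" rule of thumb) are all assumptions made for the example.

```python
from collections import defaultdict

def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs -> {group: approval rate}."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical sample: group A approved 8 of 10, group B approved 4 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 4 + [("B", False)] * 6
)
ratio = disparate_impact_ratio(sample)
needs_review = ratio < 0.8  # assumed threshold, illustrative only
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a model's outcomes deserve a closer human look.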

🔍 Solutions Emerging in 2025

1. Explainable AI (XAI)

Financial institutions are adopting models that can show the main factors behind each decision, especially in regulated areas like lending or wealth management.
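One simple form of explainability is a model whose score can be broken down feature by feature. The sketch below uses a toy linear score and returns the contribution of each input, sorted so the factors that hurt the applicant most come first. The feature names, weights, and threshold are hypothetical and chosen only to make the idea concrete; real systems often need more sophisticated attribution methods.

```python
# Hypothetical weights for a toy linear credit score; not from any real lender.
WEIGHTS = {"income_to_debt": 2.0, "years_of_history": 0.5, "missed_payments": -1.5}
BIAS = -3.0
APPROVE_AT = 0.0  # assumed decision threshold

def explain_decision(applicant):
    """Return the decision plus each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Sort ascending so the most negative contributions (the "reasons") come first.
    reasons = sorted(contributions.items(), key=lambda item: item[1])
    return {"approved": score >= APPROVE_AT, "score": score, "reasons": reasons}

result = explain_decision(
    {"income_to_debt": 1.2, "years_of_history": 4, "missed_payments": 2}
)
top_reason = result["reasons"][0][0]  # the feature that lowered the score the most
```

With an output like this, a customer who is declined can be told which factor weighed most heavily, rather than receiving an unexplained "no".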

2. Human-in-the-Loop (HITL) Systems

These systems keep humans involved in high-stakes decisions—especially when rejecting loans, freezing accounts, or altering tax positions.
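In practice, human-in-the-loop often comes down to a routing rule: some decisions run automatically, others wait for a person. The sketch below shows one possible policy. The action names, the `Decision` type, and the 0.90 confidence cutoff are assumptions for illustration, not an industry standard.

```python
from dataclasses import dataclass

# Assumed policy: these action names and the 0.90 cutoff are illustrative only.
HIGH_STAKES_ACTIONS = {"reject_loan", "freeze_account", "alter_tax_position"}
MIN_CONFIDENCE = 0.90

@dataclass
class Decision:
    action: str
    confidence: float  # the model's own confidence, in [0, 1]

def route(decision: Decision) -> str:
    """Return 'human_review' or 'auto_execute' for a proposed decision."""
    if decision.action in HIGH_STAKES_ACTIONS:
        return "human_review"  # high-stakes actions always get a human sign-off
    if decision.confidence < MIN_CONFIDENCE:
        return "human_review"  # low-confidence calls are escalated too
    return "auto_execute"
```

The design choice worth noting is that high-stakes actions are escalated regardless of confidence, since a confident model can still be confidently wrong.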

3. Audit Trails for AI Decisions

Just as human financial advisors keep records, autonomous systems must log every action so that it can be reconstructed in a post-incident review.
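An audit trail is only useful if reviewers can trust that it has not been edited after the fact. One common way to make tampering detectable is to chain entries together with hashes, as in the minimal sketch below. The `AuditLog` class and the record fields are hypothetical; a production log would also need timestamps, durable storage, and access controls.

```python
import hashlib
import json

class AuditLog:
    """Append-only log where each entry stores the hash of the previous one."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(record, prev_hash):
        # Serialize deterministically so the same record always hashes the same way.
        payload = json.dumps({"record": record, "prev": prev_hash}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record):
        prev_hash = self.entries[-1]["hash"] if self.entries else ""
        entry = {
            "record": record,
            "prev": prev_hash,
            "hash": self._digest(record, prev_hash),
        }
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash; False means an entry was altered or reordered."""
        prev_hash = ""
        for entry in self.entries:
            expected = self._digest(entry["record"], prev_hash)
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True

# Hypothetical records for a blocked payment that a human later released.
log = AuditLog()
log.append({"action": "block_payment", "model": "fraud-v1", "score": 0.97})
log.append({"action": "release_payment", "reviewer": "analyst-42"})
```

Calling `log.verify()` returns `True` for an untouched log and `False` once any stored record has been changed, which gives reviewers a quick integrity check before they rely on the history.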

4. Ethical AI Governance Boards

Forward-thinking institutions are establishing internal ethics committees to review AI policies and ensure compliance with evolving regulations.

⚖️ Who’s Ultimately Accountable?

It’s not the AI—it’s the institution that deploys it.

Whether it’s a fintech startup or a global bank, responsibility lies with the organization to:

- Test and validate its models

- Provide clear disclosure to users

- Create systems of accountability

- Ensure AI reflects company values, not just efficiency

“Autonomous doesn’t mean unaccountable. It means someone programmed, deployed, and trusted the system—so someone must answer when it fails.”

🧠 Final Thoughts

Autonomous finance holds immense potential to democratize money, reduce costs, and improve outcomes. But we must ensure that the pursuit of speed and scale doesn’t override ethics and responsibility.

The future of finance is being built now—and how we answer these ethical questions today will define trust for decades to come.