Introduction

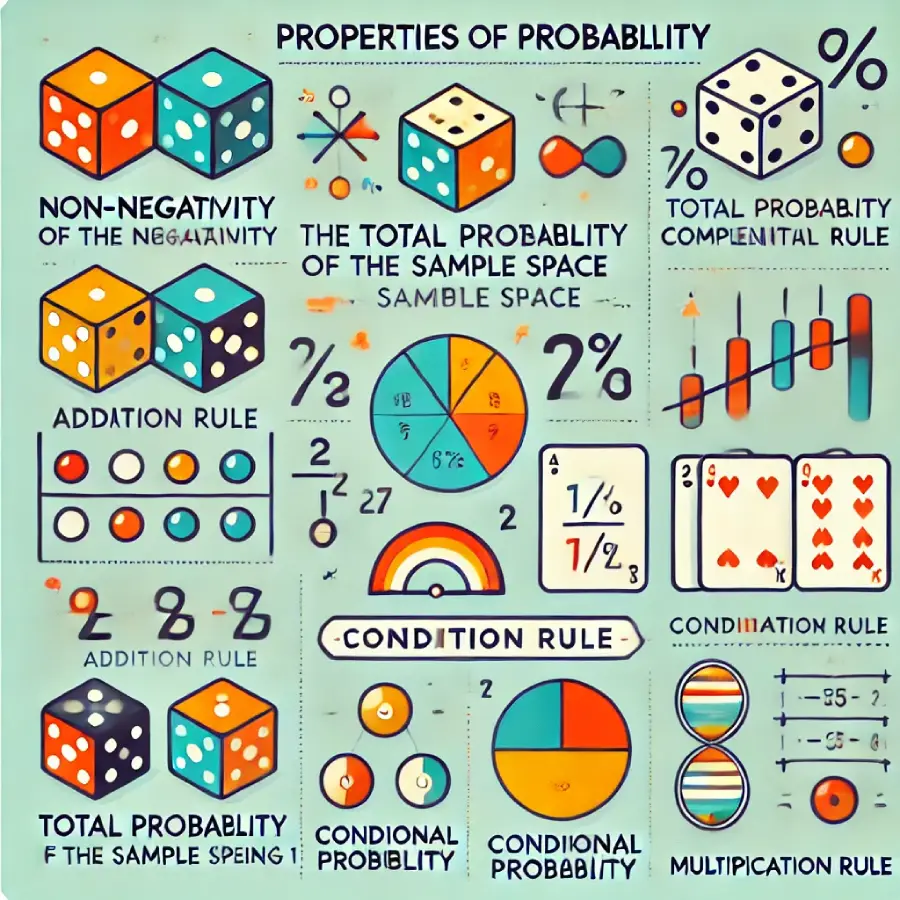

Probability theory provides a framework for quantifying uncertainty and randomness in various processes. At the core of this theory are fundamental properties that govern how probabilities behave in relation to events. Understanding these properties is essential for analyzing real-world situations, making predictions, and conducting statistical analysis. This article explores the key properties of probability, providing definitions, explanations, and real-life examples for each.

—

1. Non-Negativity Property

Definition:

The probability of any event \( A \) is always a non-negative number. In other words, for any event \( A \) in the sample space \( S \), the probability is:

\[

P(A) \geq 0

\]

No event can have a negative probability since probability represents the likelihood of an event occurring, which can never be less than zero.

Real-Life Example:

Weather Forecasting: If a meteorologist predicts a 20% chance of rain, this means the probability of rain is 0.2. However, predicting a negative probability of rain (e.g., -0.1) makes no sense. Whether it is a 0% chance (no rain) or a higher chance, probabilities are always non-negative.

—

2. The Total Probability of the Sample Space is 1

Definition:

The probability of the entire sample space \( S \) is 1. For a finite or countable sample space, this means the probabilities of all individual outcomes sum to 1:

\[

P(S) = 1

\]

This reflects the certainty that one of the possible outcomes in the sample space will occur.

Real-Life Example:

Rolling a Die: If you roll a fair six-sided die, the possible outcomes are \( \{1, 2, 3, 4, 5, 6\} \). The probability of each number is \( \frac{1}{6} \). Summing these probabilities gives:

\[

P(1) + P(2) + P(3) + P(4) + P(5) + P(6) = \frac{1}{6} + \frac{1}{6} + \frac{1}{6} + \frac{1}{6} + \frac{1}{6} + \frac{1}{6} = 1

\]

This reflects that one of these outcomes must occur.
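
As an illustrative check of the first two properties, the short sketch below represents the fair die as a dictionary of exact fractions and verifies that every probability is non-negative and that they sum to 1. The helper name `is_valid_distribution` is a hypothetical choice for this example, not a standard function.

```python
from fractions import Fraction

# Fair six-sided die: each face gets probability 1/6 (exact arithmetic).
die = {face: Fraction(1, 6) for face in range(1, 7)}

def is_valid_distribution(dist):
    """Return True if all probabilities are >= 0 and they sum to exactly 1."""
    non_negative = all(p >= 0 for p in dist.values())
    total_is_one = sum(dist.values()) == 1
    return non_negative and total_is_one

print(is_valid_distribution(die))  # True
```

Using `Fraction` instead of floating-point numbers avoids rounding error, so the comparison with 1 is exact.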

—

3. Additivity (or the Addition Rule)

Definition:

For any two mutually exclusive (disjoint) events \( A \) and \( B \) (i.e., events that cannot happen simultaneously), the probability of either event occurring is the sum of their individual probabilities:

\[

P(A \cup B) = P(A) + P(B) \quad \text{if } A \cap B = \emptyset

\]

Real-Life Example:

Drawing a Card from a Deck: In a standard 52-card deck, suppose you want to know the probability of drawing either a king or a queen in a single draw. Since one card cannot be both a king and a queen (the events are mutually exclusive), the probability is the sum of the individual probabilities:

\[

P(\text{King} \cup \text{Queen}) = P(\text{King}) + P(\text{Queen}) = \frac{4}{52} + \frac{4}{52} = \frac{8}{52} = \frac{2}{13}

\]
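
The same result can be reproduced by enumerating the deck. The sketch below assumes a standard 52-card deck and a uniformly random draw; the names `deck`, `prob`, `kings`, and `queens` are chosen for this illustration only.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = list(product(ranks, suits))  # 52 (rank, suit) pairs

def prob(event):
    """Probability of an event (a set of cards) under a uniform draw."""
    return Fraction(len(event), len(deck))

kings = {card for card in deck if card[0] == "K"}
queens = {card for card in deck if card[0] == "Q"}

# The events are disjoint, so the addition rule applies.
assert kings & queens == set()

p_union = prob(kings | queens)
print(p_union)                               # 2/13
print(p_union == prob(kings) + prob(queens))  # True
```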

—

4. Complementary Rule

Definition:

The probability of the complement of an event \( A \) (i.e., the event that \( A \) does not occur), denoted as \( A^c \), is:

\[

P(A^c) = 1 - P(A)

\]

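This property follows from the previous two: \( A \) and \( A^c \) are mutually exclusive and together make up the whole sample space, so \( P(A) + P(A^c) = P(S) = 1 \). In other words, either event \( A \) will happen or it will not.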

Real-Life Example:

Flight Arrival: Suppose the probability that a particular flight will arrive on time is 0.85. Treating every arrival as either on time or late, the probability that the flight will be late is the complement of the probability of being on time:

\[

P(\text{Late}) = 1 - P(\text{On Time}) = 1 - 0.85 = 0.15

\]

So, there is a 15% chance the flight will be late.

—

5. Subadditivity

Definition:

For any events \( A_1, A_2, \dots, A_n \), the probability of their union is less than or equal to the sum of their individual probabilities:

\[

P(A_1 \cup A_2 \cup \dots \cup A_n) \leq P(A_1) + P(A_2) + \dots + P(A_n)

\]

When events overlap, adding their individual probabilities counts the shared outcomes more than once, so the sum can only overstate the probability of the union. Equality holds when the events are mutually exclusive.

Real-Life Example:

Risk in Insurance: In insurance, suppose you want to know the probability that a car will be involved in an accident, experience theft, or suffer weather-related damage. Since these events may overlap (e.g., an accident caused by bad weather), the probability that at least one of them happens is at most the sum of the individual event probabilities, and strictly less whenever the overlap has positive probability.
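
The insurance example gives no numbers, so the sketch below illustrates the inequality with a simpler, hypothetical pair of overlapping events on a fair six-sided die: "the roll is even" and "the roll is greater than 3". The event names and the helper `prob` are assumptions made for this illustration.

```python
from fractions import Fraction

def prob(event):
    """Probability of an event (a set of faces) on a fair six-sided die."""
    return Fraction(len(event), 6)

even = {2, 4, 6}
above_three = {4, 5, 6}

p_union = prob(even | above_three)       # faces {2, 4, 5, 6}
bound = prob(even) + prob(above_three)   # sum of individual probabilities
overlap = prob(even & above_three)       # faces {4, 6}, counted twice in the sum

print(p_union, bound)               # 2/3 1
print(p_union <= bound)             # True
print(bound - p_union == overlap)   # True
```

For two events, the gap between the sum and the probability of the union is exactly the probability of the overlap, which is why the bound is tight only for disjoint events.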

—

6. Conditional Probability

Definition:

The probability of an event \( A \), given that another event \( B \) has occurred, is called the conditional probability of \( A \) given \( B \), denoted \( P(A \mid B) \). It is calculated as:

\[

P(A \mid B) = \frac{P(A \cap B)}{P(B)} \quad \text{for } P(B) > 0

\]

Real-Life Example:

Medical Testing: Suppose 2% of a population has a certain disease, and a test correctly identifies the disease 95% of the time (true positive rate). If a person tests positive, the probability that they actually have the disease is the conditional probability \( P(\text{Disease} \mid \text{Positive}) \). This value depends not only on the true positive rate but also on how often the test flags healthy people and on the base rate of the disease in the population. When the disease is rare, it can be far lower than 95%.
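
To make the medical example concrete, the sketch below computes \( P(\text{Disease} \mid \text{Positive}) \) from the definition. The 2% prevalence and 95% true positive rate come from the example above; the 5% false positive rate is an assumption added here, since the example does not state one.

```python
from fractions import Fraction

prevalence = Fraction(2, 100)           # P(Disease), from the example
sensitivity = Fraction(95, 100)         # P(Positive | Disease), from the example
false_positive_rate = Fraction(5, 100)  # P(Positive | No disease), ASSUMED

# P(Positive and Disease) = P(Positive | Disease) * P(Disease)
p_positive_and_disease = sensitivity * prevalence

# P(Positive) adds the two ways a positive result can arise.
p_positive = p_positive_and_disease + false_positive_rate * (1 - prevalence)

# Definition of conditional probability: P(A | B) = P(A and B) / P(B)
p_disease_given_positive = p_positive_and_disease / p_positive

print(p_disease_given_positive)                   # 19/68
print(round(float(p_disease_given_positive), 3))  # 0.279
```

Under these assumed numbers, fewer than three in ten positive results correspond to an actual case, because healthy people greatly outnumber sick ones.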

—

7. Multiplication Rule (Product Rule)

Definition:

For any two events \( A \) and \( B \) with positive probability, the probability that both \( A \) and \( B \) occur (their intersection) is:

\[

P(A \cap B) = P(A \mid B) P(B) = P(B \mid A) P(A)

\]

If \( A \) and \( B \) are independent events (i.e., the occurrence of one does not change the probability of the other), the formula simplifies to:

\[

P(A \cap B) = P(A) P(B)

\]

Real-Life Example:

Tossing Coins: If you flip two fair coins, the flips are independent, so the probability of getting heads on both is:

\[

P(\text{Heads on 1st coin}) \times P(\text{Heads on 2nd coin}) = 0.5 \times 0.5 = 0.25

\]

Thus, there is a 25% chance of flipping two heads.
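
One way to check this is to list all four equally likely outcomes of two fair coin flips and count. The sketch below does that and confirms the product rule for independent events; the names `sample_space`, `first_heads`, and `second_heads` are illustrative.

```python
from fractions import Fraction
from itertools import product

# All ordered outcomes of two flips: HH, HT, TH, TT (equally likely for fair coins).
sample_space = list(product("HT", repeat=2))

def prob(event):
    """Probability of an event (a set of outcomes) under equally likely outcomes."""
    return Fraction(len(event), len(sample_space))

first_heads = {o for o in sample_space if o[0] == "H"}
second_heads = {o for o in sample_space if o[1] == "H"}

p_both = prob(first_heads & second_heads)
print(p_both)                                           # 1/4
print(p_both == prob(first_heads) * prob(second_heads))  # True
```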

—

8. Law of Total Probability

Definition:

If \( B_1, B_2, \dots, B_n \) are mutually exclusive and exhaustive events (no two can occur together, and together they cover all possible outcomes), each with \( P(B_i) > 0 \), then for any event \( A \), the probability of \( A \) can be written as:

\[

P(A) = \sum_{i=1}^{n} P(A \mid B_i) P(B_i)

\]

This law helps in situations where we calculate the probability of an event by considering all the different ways it can occur.

Real-Life Example:

Marketing Campaigns: Suppose you’re running a marketing campaign targeting different demographic groups, each of which has a different probability of responding. If every targeted person belongs to exactly one group, the overall probability of a response is the sum, over all groups, of the group's response probability multiplied by the probability that a targeted person belongs to that group.
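
The marketing example can be sketched numerically as follows. All group names, group shares, and response rates below are hypothetical values invented for illustration; only the formula comes from the law of total probability.

```python
from fractions import Fraction

# Hypothetical groups: (P(group), P(response | group)).
groups = {
    "18-29": (Fraction(3, 10), Fraction(1, 10)),
    "30-49": (Fraction(1, 2), Fraction(1, 20)),
    "50+": (Fraction(1, 5), Fraction(1, 50)),
}

# The groups must be exhaustive and mutually exclusive: shares sum to 1.
assert sum(share for share, _ in groups.values()) == 1

# Law of total probability: P(A) = sum over i of P(A | B_i) * P(B_i)
p_response = sum(rate * share for share, rate in groups.values())

print(p_response)         # 59/1000
print(float(p_response))  # 0.059
```

With these made-up inputs, the overall response probability is 5.9%, a weighted average of the per-group rates.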

—

Conclusion

The properties of probability provide the foundation for understanding how likelihoods behave in various situations. By applying these principles, we can solve complex problems in fields like statistics, finance, insurance, and beyond. These properties ensure that probabilities remain consistent and logically sound, allowing us to make sense of uncertain events in the real world.