Credit Risk Models Explained: Techniques & Best Practices

Effective credit risk models are the backbone of sound lending decisions. By accurately predicting borrower default probabilities, financial institutions can optimize interest rates, set aside appropriate capital reserves, and reduce unexpected losses. In this post, we’ll explore the most common credit risk modeling approaches, their strengths and limitations, and how to implement them in your own risk framework.

Table of Contents

- What Are Credit Risk Models?
- Traditional Statistical Models
  - Logistic Regression
  - Discriminant Analysis
- Machine Learning Approaches
  - Decision Trees & Random Forests
  - Gradient Boosting Machines (GBM)
- Advanced Techniques
  - Survival Analysis
  - Neural Networks & Deep Learning
- Key Model Evaluation Metrics
  - ROC Curve & AUC
  - Gini Coefficient
  - KS Statistic
- Implementation Best Practices
  - Data Preparation & Feature Engineering
  - Model Validation & Backtesting
  - Regulatory Compliance
- Future Trends in Credit Risk Modeling
- Conclusion

What Are Credit Risk Models?

Credit risk models estimate the probability that a borrower will default on their obligations. They help banks and lenders to:

- Price loans appropriately based on default risk.

- Allocate capital under regulatory regimes like Basel II/III.

- Monitor portfolio health and trigger early-warning signals.

At their core, these models translate borrower and macroeconomic data into actionable risk scores.

Traditional Statistical Models

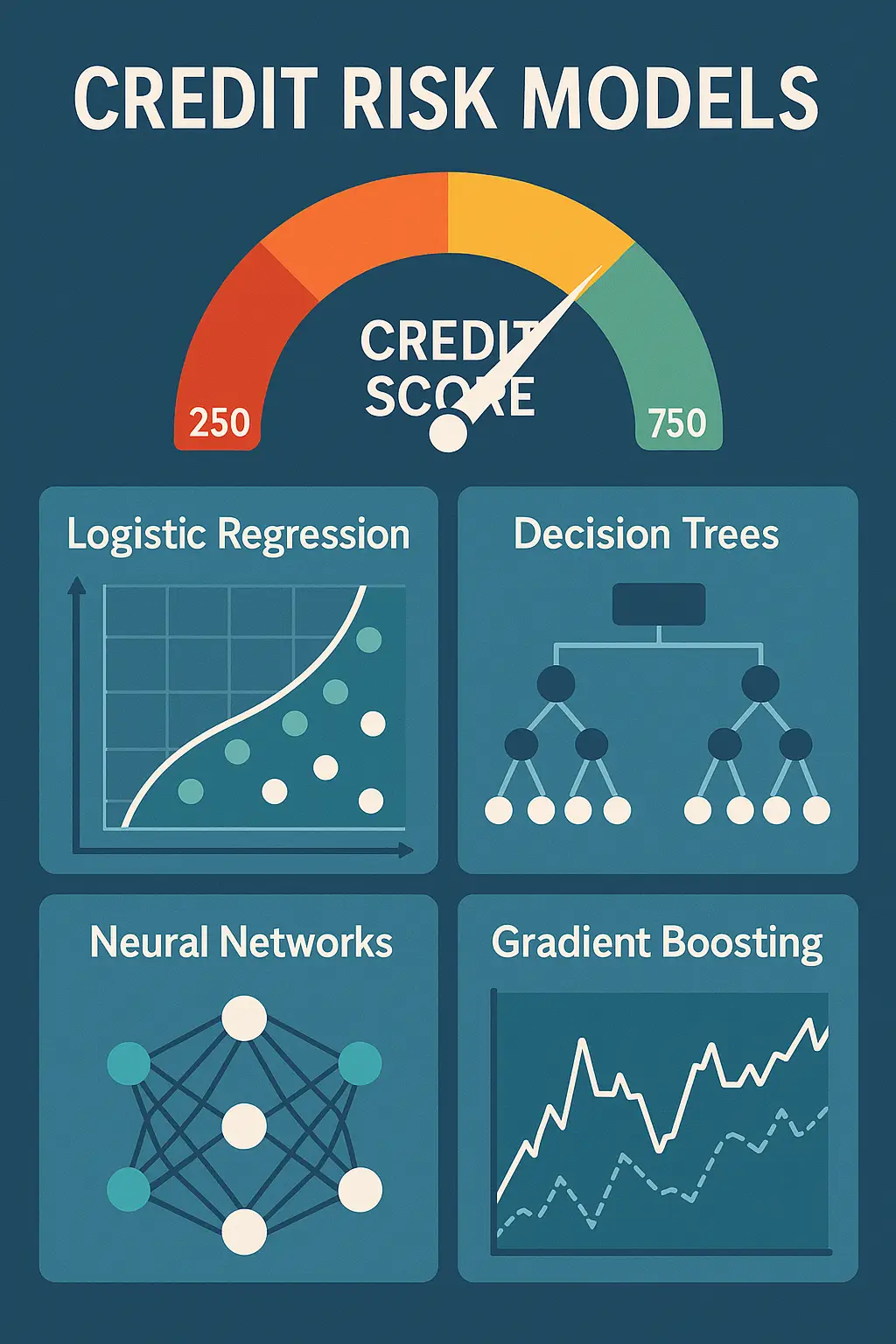

Logistic Regression

A workhorse of credit scoring, logistic regression models the log-odds of default as a linear combination of borrower features (e.g., income, credit history). Its advantages include interpretability and ease of implementation.
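To make the log-odds formulation concrete, here is a minimal sketch of how a fitted logistic regression turns borrower features into a probability of default. The intercept, coefficients, and feature names are hypothetical values chosen for illustration, not estimates from real data.

```python
import math

# Hypothetical coefficients for illustration only; a real model would
# estimate these from historical loan performance data.
INTERCEPT = -2.0
COEFFICIENTS = {
    "debt_to_income": 3.0,     # higher leverage raises the log-odds of default
    "years_of_history": -0.1,  # a longer credit history lowers them
}


def log_odds(features: dict) -> float:
    """Linear combination of features: the modeled log-odds of default."""
    return INTERCEPT + sum(COEFFICIENTS[name] * features[name] for name in COEFFICIENTS)


def probability_of_default(features: dict) -> float:
    """Map log-odds to a probability with the logistic (sigmoid) function."""
    return 1.0 / (1.0 + math.exp(-log_odds(features)))


borrower = {"debt_to_income": 0.4, "years_of_history": 5.0}
pd_estimate = probability_of_default(borrower)
print(f"log-odds: {log_odds(borrower):.2f}, PD: {pd_estimate:.3f}")
```

Because each coefficient is an additive contribution to the log-odds, a reviewer can read off how much each feature moves the score, which is where much of the method's interpretability comes from.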

Discriminant Analysis

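Linear Discriminant Analysis (LDA) projects borrower features onto the single direction that best separates defaulters from non-defaulters. While less common today, it remains valuable when its assumptions hold: features that are approximately multivariate normal within each class, with the same covariance matrix for both classes.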
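As a rough illustration of the projection idea, the sketch below computes the two-class Fisher discriminant direction for two features using only the standard library. The feature pairs (debt-to-income, credit utilization) and the sample values are made up for the example.

```python
def mean_vector(rows):
    """Column means of a list of (x, y) feature pairs."""
    n = len(rows)
    return [sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n]


def scatter_matrix(rows, mu):
    """2x2 within-class scatter: sum of outer products of deviations from the mean."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for r in rows:
        d = [r[0] - mu[0], r[1] - mu[1]]
        for i in range(2):
            for j in range(2):
                s[i][j] += d[i] * d[j]
    return s


def fisher_direction(good, bad):
    """Direction w = Sw^-1 (mean_bad - mean_good); higher projections look more like defaulters."""
    mu_g, mu_b = mean_vector(good), mean_vector(bad)
    sg, sb = scatter_matrix(good, mu_g), scatter_matrix(bad, mu_b)
    sw = [[sg[i][j] + sb[i][j] for j in range(2)] for i in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]  # assumed non-zero for this sketch
    diff = [mu_b[0] - mu_g[0], mu_b[1] - mu_g[1]]
    return [
        (sw[1][1] * diff[0] - sw[0][1] * diff[1]) / det,
        (-sw[1][0] * diff[0] + sw[0][0] * diff[1]) / det,
    ]


def project(w, row):
    """Scalar discriminant score of one borrower."""
    return w[0] * row[0] + w[1] * row[1]


# Made-up (debt_to_income, utilization) pairs for illustration.
good = [(0.20, 0.30), (0.30, 0.20), (0.25, 0.40), (0.15, 0.25)]
bad = [(0.50, 0.70), (0.60, 0.80), (0.45, 0.90), (0.55, 0.65)]
w = fisher_direction(good, bad)
good_scores = [project(w, r) for r in good]
bad_scores = [project(w, r) for r in bad]
```

On this toy data every defaulter projects above every non-defaulter, so a single cutoff on the projected score separates the two groups. Real portfolios overlap far more.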

Machine Learning Approaches

Decision Trees & Random Forests

- Decision Trees split borrower data by the most informative features (e.g., debt-to-income ratio).

- Random Forests average the predictions of many trees, each trained on a bootstrapped sample with a random subset of features, to reduce overfitting and improve stability.
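The splitting step can be sketched as follows: for one feature, try each candidate threshold and keep the one that leaves the purest groups. This sketch uses Gini impurity as the purity measure, which is one common choice; the debt-to-income values and labels are invented for illustration.

```python
def gini_impurity(labels):
    """Gini impurity of a list of 0/1 labels (1 = default); 0.0 means a pure group."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2.0 * p * (1.0 - p)


def best_split(values, labels):
    """Return (threshold, weighted_impurity) for the best 'value <= threshold' split."""
    best = (float("nan"), float("inf"))
    n = len(values)
    # The largest value is skipped because it would leave the right side empty.
    for threshold in sorted(set(values))[:-1]:
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        weighted = (len(left) * gini_impurity(left) + len(right) * gini_impurity(right)) / n
        if weighted < best[1]:
            best = (threshold, weighted)
    return best


# Invented debt-to-income ratios and default flags.
dti = [0.10, 0.20, 0.30, 0.45, 0.50, 0.60]
defaulted = [0, 0, 0, 1, 1, 1]
threshold, impurity = best_split(dti, defaulted)
print(threshold, impurity)
```

A full tree repeats this search over every feature and then recurses into each side of the chosen split. A random forest repeats the whole procedure on resampled data.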

Gradient Boosting Machines (GBM)

GBM implementations like XGBoost and LightGBM build models sequentially, with each new tree fitted to the errors of the ensemble built so far. They often outperform simpler methods but require careful tuning of settings such as the learning rate, tree depth, and number of trees.
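The sequential idea can be shown with a deliberately simplified sketch: one-split "stumps" on a single feature, each fitted to the residuals of the current ensemble under squared-error loss. This is a toy illustration of the boosting loop, not how XGBoost or LightGBM are implemented (they use log-loss for classification, regularization, and many other refinements). The data and function names are hypothetical.

```python
def fit_stump(xs, residuals):
    """Best single-threshold split of xs for predicting residuals (squared error)."""
    best, best_err = None, float("inf")
    for t in sorted(set(xs))[:-1]:  # the largest value would leave the right side empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - left_mean) ** 2 for r in left) + sum((r - right_mean) ** 2 for r in right)
        if err < best_err:
            best, best_err = (t, left_mean, right_mean), err
    return best


def stump_predict(stump, x):
    """Prediction of one stump: the mean residual on the side x falls into."""
    t, left_mean, right_mean = stump
    return left_mean if x <= t else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Fit stumps one after another, each on the residuals of the ensemble so far."""
    base = sum(ys) / len(ys)  # start from the overall default rate
    preds = [base] * len(xs)
    stumps = []
    for _ in range(n_rounds):
        residuals = [y - p for y, p in zip(ys, preds)]
        stump = fit_stump(xs, residuals)
        if stump is None:  # feature has a single distinct value; nothing to split
            break
        stumps.append(stump)
        preds = [p + learning_rate * stump_predict(stump, x) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps


def predict(model, x):
    """Sum the base rate and the shrunken contribution of every stump."""
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)


# Invented debt-to-income ratios and default flags.
dti = [0.10, 0.20, 0.30, 0.45, 0.50, 0.60]
defaulted = [0, 0, 0, 1, 1, 1]
model = boost(dti, defaulted)
```

The learning rate shrinks each stump's contribution, so many small corrections accumulate instead of one large one. That trade-off between the number of rounds and the step size is a large part of the tuning effort.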

Advanced Techniques

Survival Analysis

Unlike binary classifiers, survival models (e.g., Cox Proportional Hazards) estimate time-to-default, offering richer insights into when a borrower might default.
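To illustrate the time-to-default view, here is a small sketch of the Kaplan-Meier estimator, a simpler non-parametric relative of the Cox model that estimates the share of loans still performing at each point in time. It is not a Cox Proportional Hazards fit and uses no covariates. The loan durations and flags are invented; a `False` flag means the loan was still performing when observation ended (censored).

```python
def kaplan_meier(durations, defaulted):
    """Return [(time, survival_probability)] at each time with an observed default.

    durations: months each loan was observed.
    defaulted: True if the loan defaulted at that time, False if censored.
    """
    event_times = sorted({t for t, d in zip(durations, defaulted) if d})
    survival = 1.0
    curve = []
    for t in event_times:
        at_risk = sum(1 for u in durations if u >= t)  # loans still observed just before t
        events = sum(1 for u, d in zip(durations, defaulted) if u == t and d)
        survival *= 1.0 - events / at_risk
        curve.append((t, survival))
    return curve


# Invented observation windows (months) and default flags.
months = [6, 12, 12, 18, 24, 24]
default_flags = [True, True, False, True, False, False]
curve = kaplan_meier(months, default_flags)
for t, s in curve:
    print(f"month {t}: {s:.3f} of loans estimated still performing")
```

Censored loans stay in the at-risk count until their observation ends, which is how survival methods use partial histories that a plain default/no-default label would discard or mislabel.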

Neural Networks & Deep Learning

Deep learning architectures can capture complex, non-linear relationships in large datasets. Techniques such as autoencoders also enable advanced feature learning for credit scoring.

Key Model Evaluation Metrics

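- ROC Curve & AUC: Measures a model’s ability to discriminate between defaulters and non-defaulters. AUC is the probability that a randomly chosen defaulter receives a higher risk score than a randomly chosen non-defaulter; 0.5 is no better than chance and 1.0 is perfect ranking.

- Gini Coefficient: A linear rescaling of AUC (Gini = 2 × AUC - 1) commonly used in credit risk, so that 0 means no discrimination and 1 means perfect ranking.

- KS Statistic: The maximum difference between the cumulative score distributions of good and bad borrowers. A high KS indicates strong separation.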
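The three metrics above can be computed directly from scores and outcomes. The sketch below uses a brute-force pairwise AUC for clarity rather than speed, and the scores and labels are invented for illustration (a higher score means higher predicted risk; label 1 means default).

```python
def auc(scores, labels):
    """Share of (defaulter, non-defaulter) pairs ranked correctly; ties count half."""
    bad = [s for s, y in zip(scores, labels) if y == 1]
    good = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((b > g) + 0.5 * (b == g) for b in bad for g in good)
    return wins / (len(bad) * len(good))


def gini(scores, labels):
    """Gini coefficient as a rescaling of AUC."""
    return 2.0 * auc(scores, labels) - 1.0


def ks_statistic(scores, labels):
    """Largest gap between the cumulative score distributions of goods and bads."""
    bad = [s for s, y in zip(scores, labels) if y == 1]
    good = [s for s, y in zip(scores, labels) if y == 0]
    largest_gap = 0.0
    for t in sorted(set(scores)):
        cdf_bad = sum(s <= t for s in bad) / len(bad)
        cdf_good = sum(s <= t for s in good) / len(good)
        largest_gap = max(largest_gap, abs(cdf_good - cdf_bad))
    return largest_gap


# Invented risk scores and outcomes.
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
labels = [0, 0, 0, 1, 0, 1, 1, 1]
print(auc(scores, labels), gini(scores, labels), ks_statistic(scores, labels))
```

All three depend only on how the model ranks borrowers, not on whether the predicted probabilities are well calibrated, so calibration needs a separate check.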

Implementation Best Practices

Data Preparation & Feature Engineering

- Clean and impute missing data (e.g., with median or k-NN imputation).

- Create ratio features (debt-to-income, loan-to-value).

- Incorporate behavioral data (credit utilization trends).
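The three preparation steps above might look like this in a minimal standard-library sketch. The column values are invented, and the helper names (`impute_median`, `ratio`, `trend_slope`) are hypothetical, chosen only for this example.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def ratio(numerators, denominators):
    """Element-wise ratio, e.g. monthly debt / monthly income.

    Assumes non-zero denominators; real data would need an explicit rule for zeros.
    """
    return [n / d for n, d in zip(numerators, denominators)]


def trend_slope(series):
    """Least-squares slope of a series against its index (0, 1, 2, ...)."""
    n = len(series)
    mean_x = (n - 1) / 2
    mean_y = sum(series) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(series))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


# Invented monthly figures for four borrowers; one income is missing.
monthly_income = impute_median([4000.0, None, 6000.0, 5000.0])
monthly_debt = [1200.0, 1500.0, 1500.0, 2500.0]
debt_to_income = ratio(monthly_debt, monthly_income)

# Invented credit utilization for one borrower over four months.
utilization_trend = trend_slope([0.2, 0.3, 0.4, 0.5])
```

In practice the imputation value should be computed on the training sample only and then reused on validation and production data, so that information from later periods does not leak into the model.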

Model Validation & Backtesting

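- Use out-of-time holdouts (loans originated after the training period) to guard against overfitting and to check that performance holds up as conditions change.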

- Perform stress-testing under adverse economic scenarios.
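An out-of-time holdout can be sketched as a split on origination date instead of a random shuffle. The record layout (`origination_date`, `defaulted`) and the cutoff are assumptions made for this example.

```python
from datetime import date


def out_of_time_split(loans, cutoff):
    """Train on loans originated before the cutoff; hold out those on or after it."""
    train = [loan for loan in loans if loan["origination_date"] < cutoff]
    holdout = [loan for loan in loans if loan["origination_date"] >= cutoff]
    return train, holdout


def default_rate(loans):
    """Observed share of defaulted loans in a sample."""
    return sum(loan["defaulted"] for loan in loans) / len(loans)


# Invented loan records for illustration.
loans = [
    {"origination_date": date(2021, 3, 1), "defaulted": 0},
    {"origination_date": date(2021, 9, 15), "defaulted": 1},
    {"origination_date": date(2022, 2, 1), "defaulted": 0},
    {"origination_date": date(2022, 11, 20), "defaulted": 0},
    {"origination_date": date(2023, 4, 5), "defaulted": 1},
    {"origination_date": date(2023, 8, 30), "defaulted": 0},
]
train, holdout = out_of_time_split(loans, cutoff=date(2023, 1, 1))
print(len(train), len(holdout), default_rate(train), default_rate(holdout))
```

Comparing default rates and discrimination metrics between the two samples gives an early sign of whether the relationships the model learned still hold in the later period.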

Regulatory Compliance

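- Ensure models meet the applicable regulatory and accounting requirements, such as the Basel capital framework (the final Basel III reforms, informally called Basel IV) and IFRS 9 expected credit loss provisioning, along with supervisory expectations for explainability and backtesting.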

- Maintain audit trails and version control for every model iteration.

Future Trends in Credit Risk Modeling

- Explainable AI (XAI): Tools like SHAP and LIME make complex models transparent.

- Alternative Data Sources: Incorporating utility payments, rental history, and social data for under-banked segments.

- Federated Learning: Collaborative model-building across institutions without sharing raw data.

Conclusion

Building robust credit risk models combines statistical rigor, machine learning innovation, and strict governance. Whether you start with logistic regression or advance to deep learning, the key lies in clean data, thorough validation, and clear explainability. Implement these best practices to enhance lending decisions, reduce losses, and comply with evolving regulations.